Deploying a Redis Cluster with Redis 6.2.8

1. Preparation and environment planning:

| IP | Hostname | Port | Role |
|---|---|---|---|
| 192.168.238.102 | redis-cluster | 6701 | master |
| 192.168.238.102 | redis-cluster | 6702 | slave of master 6705 |
| 192.168.238.102 | redis-cluster | 6703 | master |
| 192.168.238.102 | redis-cluster | 6704 | slave of master 6701 |
| 192.168.238.102 | redis-cluster | 6705 | master |
| 192.168.238.102 | redis-cluster | 6706 | slave of master 6703 |

# Install the compilers needed to build Redis
yum -y install gcc gcc-c++

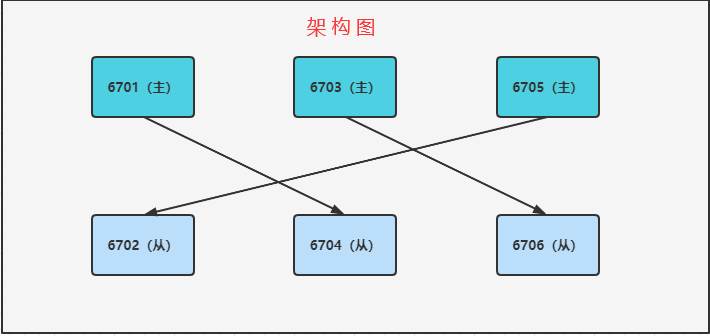

Architecture:

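The Redis cluster uses a three-master, three-replica cross-replication architecture. Because server resources are limited, the cluster is simulated on a single machine here. In a real production environment, prepare three machines and deploy two Redis nodes on each (one master and one replica), so that the replica on each machine backs up a master running on a different machine.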

2. Install and configure Redis

2.1 Install Redis 6.2.8

1. Download Redis
mkdir -p /root/soft
cd /root/soft
wget https://download.redis.io/releases/redis-6.2.8.tar.gz

2. Extract and compile Redis
tar xf redis-6.2.8.tar.gz
cd redis-6.2.8
make

2.2 Create the Redis Cluster directories

1. Create the directories that hold each cluster node's configuration, data, log and PID files

cd /opt

mkdir data

ln -s /opt/data /data

mkdir /data/redis-6.2.8-cluster/{bin,conf,data,var,logs} -p

ll /data/redis-6.2.8-cluster/{bin,conf,data,var,logs}

# Directory layout
bin   # executables
conf  # configuration files
data  # data (persistence) files
logs  # log files
var   # PID files

2. Prepare the Redis executables
cd /root/soft/redis-6.2.8
cp src/redis-benchmark /data/redis-6.2.8-cluster/bin
cp src/redis-check-aof /data/redis-6.2.8-cluster/bin
cp src/redis-check-rdb /data/redis-6.2.8-cluster/bin
cp src/redis-cli /data/redis-6.2.8-cluster/bin
cp src/redis-sentinel /data/redis-6.2.8-cluster/bin
cp src/redis-server /data/redis-6.2.8-cluster/bin
cp src/redis-trib.rb /data/redis-6.2.8-cluster/bin

# Verify
[root@test102 redis-6.2.8]# tree /data/redis-6.2.8-cluster
/data/redis-6.2.8-cluster
├── bin
│   ├── redis-benchmark
│   ├── redis-check-aof
│   ├── redis-check-rdb
│   ├── redis-cli
│   ├── redis-sentinel
│   ├── redis-server
│   └── redis-trib.rb
├── conf
├── data
├── logs
└── var
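The seven cp commands in step 2 differ only in the file name, so they can also be written as a loop. A minimal bash sketch, assuming the source tree from 2.1 and the bin/ directory from 2.2 as defaults; the function name copy_redis_bins is hypothetical:

```shell
# Hypothetical helper: copy the Redis executables listed in step 2 from a
# compiled source tree into the cluster's bin/ directory.
copy_redis_bins() {
  local src=${1:-/root/soft/redis-6.2.8/src}
  local dest=${2:-/data/redis-6.2.8-cluster/bin}
  local name
  mkdir -p "$dest"
  for name in redis-benchmark redis-check-aof redis-check-rdb \
              redis-cli redis-sentinel redis-server redis-trib.rb; do
    # -p keeps the executable bit; stop at the first file that cannot be copied
    cp -p "$src/$name" "$dest/" || { echo "missing: $src/$name" >&2; return 1; }
  done
}
```

Called without arguments it uses the paths from this guide; returning at the first failure makes a build that did not produce every binary obvious.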

3. Configure a three-master, three-replica Redis Cluster with cross replication

3.1 Prepare the configuration files for the six nodes

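The complete Redis 6.2.8 configuration file is listed below; the commented lines are the ones that matter most here. (This file is for reference only; you do not need to add it.) Redis treats "#" as a comment only at the start of a line, so each comment sits on its own line; a comment trailing a directive would make Redis reject the file.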

bind 0.0.0.0

# Disable protected mode
protected-mode no

# Port number
port 6701

tcp-backlog 511

timeout 0

tcp-keepalive 300

# Run in the background
daemonize yes

# PID file location
pidfile /data/redis-6.2.8-cluster/var/redis_6701.pid

loglevel notice

# Log file path
logfile /data/redis-6.2.8-cluster/logs/redis_6701.log

databases 16

# Always show the ASCII-art Redis logo at startup
always-show-logo yes

set-proc-title yes

proc-title-template "{title} {listen-addr} {server-mode}"

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

# File name of the RDB persistence file
dbfilename redis_6701.rdb

rdb-del-sync-files no

# Directory for the persistence files
dir /data/redis-6.2.8-cluster/data

replica-serve-stale-data yes

replica-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-diskless-load disabled

repl-disable-tcp-nodelay no

replica-priority 100

acllog-max-len 128

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no

lazyfree-lazy-user-del no

lazyfree-lazy-user-flush no

oom-score-adj no

oom-score-adj-values 0 200 800

disable-thp yes

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

lua-time-limit 5000

# Enable cluster mode
cluster-enabled yes

# Name of the cluster configuration file that this node maintains
cluster-config-file nodes_6701.conf

# Cluster node timeout, in milliseconds
cluster-node-timeout 15000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

stream-node-max-bytes 4096

stream-node-max-entries 100

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit replica 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

dynamic-hz yes

aof-rewrite-incremental-fsync yes

rdb-save-incremental-fsync yes

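jemalloc-bg-thread yes

0. Copy the sample configuration file from the Redis source directory (optional), then change into the conf directory, where the node configuration files below are created

cd /root/soft/redis-6.2.8
cp redis.conf /data/redis-6.2.8-cluster/conf/
cd /data/redis-6.2.8-cluster/conf/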

1. Configuration file for node 6701

cat > redis_6701.conf << EOF
bind 0.0.0.0
protected-mode no
port 6701
daemonize yes
pidfile /data/redis-6.2.8-cluster/var/redis_6701.pid
logfile /data/redis-6.2.8-cluster/logs/redis_6701.log
always-show-logo yes
dbfilename redis_6701.rdb
dir /data/redis-6.2.8-cluster/data
cluster-enabled yes
cluster-config-file nodes_6701.conf
cluster-node-timeout 15000
EOF

2. Configuration file for node 6702

cat > redis_6702.conf << EOF
bind 0.0.0.0
protected-mode no
port 6702
daemonize yes
pidfile /data/redis-6.2.8-cluster/var/redis_6702.pid
logfile /data/redis-6.2.8-cluster/logs/redis_6702.log
always-show-logo yes
dbfilename redis_6702.rdb
dir /data/redis-6.2.8-cluster/data
cluster-enabled yes
cluster-config-file nodes_6702.conf
cluster-node-timeout 15000
EOF

3. Configuration file for node 6703

cat > redis_6703.conf << EOF
bind 0.0.0.0
protected-mode no
port 6703
daemonize yes
pidfile /data/redis-6.2.8-cluster/var/redis_6703.pid
logfile /data/redis-6.2.8-cluster/logs/redis_6703.log
always-show-logo yes
dbfilename redis_6703.rdb
dir /data/redis-6.2.8-cluster/data
cluster-enabled yes
cluster-config-file nodes_6703.conf
cluster-node-timeout 15000
EOF

4. Configuration file for node 6704

cat > redis_6704.conf << EOF
bind 0.0.0.0
protected-mode no
port 6704
daemonize yes
pidfile /data/redis-6.2.8-cluster/var/redis_6704.pid
logfile /data/redis-6.2.8-cluster/logs/redis_6704.log
always-show-logo yes
dbfilename redis_6704.rdb
dir /data/redis-6.2.8-cluster/data
cluster-enabled yes
cluster-config-file nodes_6704.conf
cluster-node-timeout 15000
EOF

5. Configuration file for node 6705

cat > redis_6705.conf << EOF
bind 0.0.0.0
protected-mode no
port 6705
daemonize yes
pidfile /data/redis-6.2.8-cluster/var/redis_6705.pid
logfile /data/redis-6.2.8-cluster/logs/redis_6705.log
always-show-logo yes
dbfilename redis_6705.rdb
dir /data/redis-6.2.8-cluster/data
cluster-enabled yes
cluster-config-file nodes_6705.conf
cluster-node-timeout 15000
EOF

6. Configuration file for node 6706

cat > redis_6706.conf << EOF
bind 0.0.0.0
protected-mode no
port 6706
daemonize yes
pidfile /data/redis-6.2.8-cluster/var/redis_6706.pid
logfile /data/redis-6.2.8-cluster/logs/redis_6706.log
always-show-logo yes
dbfilename redis_6706.rdb
dir /data/redis-6.2.8-cluster/data
cluster-enabled yes
cluster-config-file nodes_6706.conf
cluster-node-timeout 15000
EOF
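The six configuration files differ only in the port number, so they can be generated by one heredoc inside a loop. A minimal bash sketch, assuming the directory layout from 2.2; BASE and the function names write_node_conf and write_all_confs are hypothetical. Because the EOF delimiter is unquoted, ${port} and ${BASE} are expanded when each file is written:

```shell
# Hypothetical helpers: write redis_<port>.conf for ports 6701-6706 with the
# same twelve directives as the six heredocs above.
BASE=${BASE:-/data/redis-6.2.8-cluster}

write_node_conf() {
  local port=$1
  cat > "$BASE/conf/redis_${port}.conf" <<EOF
bind 0.0.0.0
protected-mode no
port ${port}
daemonize yes
pidfile ${BASE}/var/redis_${port}.pid
logfile ${BASE}/logs/redis_${port}.log
always-show-logo yes
dbfilename redis_${port}.rdb
dir ${BASE}/data
cluster-enabled yes
cluster-config-file nodes_${port}.conf
cluster-node-timeout 15000
EOF
}

write_all_confs() {
  local port
  mkdir -p "$BASE/conf"
  for port in 6701 6702 6703 6704 6705 6706; do
    write_node_conf "$port" || return 1
  done
}
```

With the default BASE, write_all_confs produces the same six files as the manual steps; adding a node later only means adding its port to the list.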

3.2 Start all six nodes

1) Start each Redis node

/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6701.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6702.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6703.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6704.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6705.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6706.conf
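The six start commands can be wrapped in a loop as well. A minimal bash sketch, assuming the layout from 2.2; BASE and start_all_nodes are hypothetical names. With daemonize yes each redis-server call returns once the process has moved to the background, so a zero exit status does not necessarily mean the node is listening; the file and process checks in step 2) remain the real confirmation:

```shell
# Hypothetical helper: start the six nodes one after another, using the same
# binary and configuration paths as the commands above.
BASE=${BASE:-/data/redis-6.2.8-cluster}

start_all_nodes() {
  local port
  for port in 6701 6702 6703 6704 6705 6706; do
    "$BASE/bin/redis-server" "$BASE/conf/redis_${port}.conf" \
      || { echo "node $port failed to start" >&2; return 1; }
  done
}
```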

2) Check the files and processes created by each node

# Check the files generated by each node

ll /data/redis-6.2.8-cluster/{conf,data,logs,var}

# Check the processes

ps -ef | grep redis

3.3 Make the cluster nodes discover each other (run this on any one node)

[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6701
127.0.0.1:6701> CLUSTER MEET 192.168.238.102 6702
127.0.0.1:6701> CLUSTER MEET 192.168.238.102 6703
127.0.0.1:6701> CLUSTER MEET 192.168.238.102 6704
127.0.0.1:6701> CLUSTER MEET 192.168.238.102 6705
127.0.0.1:6701> CLUSTER MEET 192.168.238.102 6706
127.0.0.1:6701> CLUSTER NODES
4bc28ec0219909d076684806bae49466c5c1b968 192.168.238.102:6701@16701 myself,master - 0 1671008944000 1 connected
aeb0247ff39f538ddb5e31f9e4b1b59f39595b24 192.168.238.102:6703@16703 master - 0 1671008943216 2 connected
33b69af58c1ca1044dee5f9b26acacf8c2088e57 192.168.238.102:6706@16706 master - 0 1671008942190 5 connected
d41f907ccbf268ef61a836cbe45dd2bebe8cbf04 192.168.238.102:6702@16702 master - 0 1671008945257 0 connected
a90ae4d192031529dae51bc3f5ef294a1fa5e4f6 192.168.238.102:6705@16705 master - 0 1671008944235 4 connected
4fedc9a75539ba1cefdf19abc3163d090c21e83a 192.168.238.102:6704@16704 master - 0 1671008943000 3 connected

After these commands, the cluster membership is written to each node's cluster configuration file:

cat /data/redis-6.2.8-cluster/data/nodes_670*
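The CLUSTER MEET commands above can also be sent non-interactively, since redis-cli accepts a command as arguments. A minimal bash sketch; HOST, REDIS_CLI and meet_all_nodes are hypothetical names that default to the values used in this guide:

```shell
# Hypothetical helper: send CLUSTER MEET from node 6701 to the other five
# nodes instead of typing each command in an interactive redis-cli session.
HOST=${HOST:-192.168.238.102}
REDIS_CLI=${REDIS_CLI:-/data/redis-6.2.8-cluster/bin/redis-cli}

meet_all_nodes() {
  local port
  for port in 6702 6703 6704 6705 6706; do
    # stop at the first redis-cli call that exits non-zero (e.g. 6701 unreachable)
    "$REDIS_CLI" -p 6701 CLUSTER MEET "$HOST" "$port" || return 1
  done
}
```

Introducing every node to 6701 is enough: the gossip protocol then spreads the membership to all nodes, which is why the cat above shows the same six entries in every nodes_*.conf.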

3.4 Assign slots to the master nodes

1) Assign the slots

/data/redis-6.2.8-cluster/bin/redis-cli -p 6701 cluster addslots {0..5461}

/data/redis-6.2.8-cluster/bin/redis-cli -p 6703 cluster addslots {5462..10922}

/data/redis-6.2.8-cluster/bin/redis-cli -p 6705 cluster addslots {10923..16383}

2) Check the cluster state

Once all 16384 slots are assigned, the cluster state changes to ok.

[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6701
127.0.0.1:6701> cluster info
cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
cluster_current_epoch:5
cluster_my_epoch:1
cluster_stats_messages_ping_sent:242
cluster_stats_messages_pong_sent:240
cluster_stats_messages_meet_sent:5
cluster_stats_messages_sent:487
cluster_stats_messages_ping_received:240
cluster_stats_messages_pong_received:247
cluster_stats_messages_received:487
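For scripts, the same check can be made on the cluster info output instead of reading it by eye. A minimal bash sketch; cluster_is_ok is a hypothetical name. The lines of the CLUSTER INFO reply may end in CR/LF, so carriage returns are stripped first (harmless if there are none):

```shell
# Hypothetical helper: read `cluster info` output on stdin and succeed only if
# cluster_state is ok and all 16384 slots are both assigned and ok.
cluster_is_ok() {
  tr -d '\r' | awk -F: '
    $1 == "cluster_state"          { state = $2 }
    $1 == "cluster_slots_assigned" { assigned = $2 }
    $1 == "cluster_slots_ok"       { ok = $2 }
    END { exit !(state == "ok" && assigned + 0 == 16384 && ok + 0 == 16384) }'
}
```

For example: /data/redis-6.2.8-cluster/bin/redis-cli -p 6701 cluster info | cluster_is_ok && echo "cluster ok".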

3) View the node information

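Although all slots are now assigned, every node in the cluster is still a master: 6702, 6704 and 6706 hold no slots and nothing is replicated yet, so the cluster has no failover protection.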

127.0.0.1:6701> cluster nodes
4bc28ec0219909d076684806bae49466c5c1b968 192.168.238.102:6701@16701 myself,master - 0 1671009232000 1 connected 0-5461
aeb0247ff39f538ddb5e31f9e4b1b59f39595b24 192.168.238.102:6703@16703 master - 0 1671009236569 2 connected 5462-10922
33b69af58c1ca1044dee5f9b26acacf8c2088e57 192.168.238.102:6706@16706 master - 0 1671009236000 5 connected
d41f907ccbf268ef61a836cbe45dd2bebe8cbf04 192.168.238.102:6702@16702 master - 0 1671009236000 0 connected
a90ae4d192031529dae51bc3f5ef294a1fa5e4f6 192.168.238.102:6705@16705 master - 0 1671009237582 4 connected 10923-16383
4fedc9a75539ba1cefdf19abc3163d090c21e83a 192.168.238.102:6704@16704 master - 0 1671009234000 3 connected
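The three slot ranges in step 1) were chosen by hand. They can also be computed for any number of masters. A minimal bash sketch; split_slots, assign_slots and REDIS_CLI are hypothetical names. It uses plain integer division, so the boundaries may differ by one from the manual ranges above (0-5460, 5461-10921 and 10922-16383 for three masters). Bash brace expansion such as {$start..$end} does not expand variables, so seq produces the slot list instead:

```shell
# Hypothetical helpers: split slots 0-16383 into N contiguous ranges and hand
# each range to one master with CLUSTER ADDSLOTS.
REDIS_CLI=${REDIS_CLI:-/data/redis-6.2.8-cluster/bin/redis-cli}

split_slots() {
  local n=$1 i
  for ((i = 0; i < n; i++)); do
    echo "$(( i * 16384 / n )) $(( (i + 1) * 16384 / n - 1 ))"
  done
}

assign_slots() {
  # arguments: master ports, one per range, e.g. 6701 6703 6705
  local ports=("$@") i=0 start end
  while read -r start end; do
    # $(seq ...) is left unquoted on purpose: each slot becomes one argument;
    # </dev/null keeps redis-cli away from the loop's input
    "$REDIS_CLI" -p "${ports[i]}" CLUSTER ADDSLOTS $(seq "$start" "$end") </dev/null || return 1
    i=$((i + 1))
  done < <(split_slots "${#ports[@]}")
}
```

On empty masters, assign_slots 6701 6703 6705 would replace the three addslots commands in step 1).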

3.5 Configure three-master, three-replica cross replication

1) Get the IDs of the master nodes

[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6701 cluster nodes | egrep '6701|6703|6705' | awk '{print $1,$2}'

4bc28ec0219909d076684806bae49466c5c1b968 192.168.238.102:6701@16701

aeb0247ff39f538ddb5e31f9e4b1b59f39595b24 192.168.238.102:6703@16703

a90ae4d192031529dae51bc3f5ef294a1fa5e4f6 192.168.238.102:6705@16705

2) Configure cross replication by logging in to each replica

1. Make node 6702 replicate master 6705 (6702 becomes the slave of master 6705)
[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6702
127.0.0.1:6702> CLUSTER REPLICATE a90ae4d192031529dae51bc3f5ef294a1fa5e4f6
OK

2. Make node 6704 replicate master 6701 (6704 becomes the slave of master 6701)
[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6704
127.0.0.1:6704> CLUSTER REPLICATE 4bc28ec0219909d076684806bae49466c5c1b968
OK

3. Make node 6706 replicate master 6703 (6706 becomes the slave of master 6703)
[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6706
127.0.0.1:6706> CLUSTER REPLICATE aeb0247ff39f538ddb5e31f9e4b1b59f39595b24
OK
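Copying node IDs by hand is error-prone because the IDs change whenever the cluster is rebuilt. A minimal bash sketch that looks the IDs up by port from the cluster nodes output; node_id_for_port, setup_cross_replication and REDIS_CLI are hypothetical names, and the replica:master port pairs come from the planning table in section 1:

```shell
# Hypothetical helper: print the node ID whose address uses the given port,
# reading `cluster nodes` output on stdin.
node_id_for_port() {
  # field 2 looks like 192.168.238.102:6705@16705; compare the part before '@'
  awk -v p="$1" '{
    split($2, a, "@"); n = split(a[1], hp, ":")
    if (hp[n] == p) { print $1; exit }
  }'
}

REDIS_CLI=${REDIS_CLI:-/data/redis-6.2.8-cluster/bin/redis-cli}

# Hypothetical helper: apply the replica:master pairs from the planning table.
setup_cross_replication() {
  local pair replica master id
  for pair in 6702:6705 6704:6701 6706:6703; do
    replica=${pair%%:*} master=${pair##*:}
    id=$("$REDIS_CLI" -p 6701 cluster nodes | node_id_for_port "$master")
    [ -n "$id" ] || { echo "no node on port $master" >&2; return 1; }
    "$REDIS_CLI" -p "$replica" CLUSTER REPLICATE "$id" || return 1
  done
}
```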

3) View the cluster node information

The cluster now runs in three-master, three-replica cross-replication mode.

[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6701
127.0.0.1:6701> CLUSTER NODES
4bc28ec0219909d076684806bae49466c5c1b968 192.168.238.102:6701@16701 myself,master - 0 1671010100000 1 connected 0-5461
aeb0247ff39f538ddb5e31f9e4b1b59f39595b24 192.168.238.102:6703@16703 master - 0 1671010104000 2 connected 5462-10922
33b69af58c1ca1044dee5f9b26acacf8c2088e57 192.168.238.102:6706@16706 slave aeb0247ff39f538ddb5e31f9e4b1b59f39595b24 0 1671010103351 2 connected
d41f907ccbf268ef61a836cbe45dd2bebe8cbf04 192.168.238.102:6702@16702 slave a90ae4d192031529dae51bc3f5ef294a1fa5e4f6 0 1671010104371 4 connected
a90ae4d192031529dae51bc3f5ef294a1fa5e4f6 192.168.238.102:6705@16705 master - 0 1671010101313 4 connected 10923-16383
4fedc9a75539ba1cefdf19abc3163d090c21e83a 192.168.238.102:6704@16704 slave 4bc28ec0219909d076684806bae49466c5c1b968 0 1671010103000 1 connected
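To compare this output with the planning table without matching 40-character IDs by eye, the replica relationships can be translated back into addresses. A minimal bash sketch; replica_map is a hypothetical name. It relies on the cluster nodes field order shown above (ID, address, flags, master ID):

```shell
# Hypothetical helper: print "replica -> master" address pairs from
# `cluster nodes` output on stdin, sorted by replica address.
replica_map() {
  awk '{
    split($2, a, "@")            # ip:port@cport -> ip:port
    addr[$1] = a[1]; flags[$1] = $3; master[$1] = $4
  }
  END {
    for (id in flags)
      if (flags[id] ~ /slave/) print addr[id], "->", addr[master[id]]
  }' | sort
}
```

For the output above it should print 6702 -> 6705, 6704 -> 6701 and 6706 -> 6703 (with the 192.168.238.102 prefix), matching the table in section 1.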

4) Test

# Start redis-cli with -c (cluster mode) so that it follows the redirections to the node that owns each slot
[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli -p 6701 -c
127.0.0.1:6701> set k1 v1
-> Redirected to slot [12706] located at 192.168.238.102:6705
OK
192.168.238.102:6705> set k2 v2
-> Redirected to slot [449] located at 192.168.238.102:6701
OK
192.168.238.102:6705> set k3 v3
-> Redirected to slot [4576] located at 192.168.238.102:6701
OK
192.168.238.102:6701> set k4 v4
-> Redirected to slot [8455] located at 192.168.238.102:6703
OK
192.168.238.102:6703>
192.168.238.102:6703> get k4
"v4"
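The slot numbers in these redirections come from the key itself: Redis Cluster hashes the key with CRC16 (the XMODEM variant, polynomial 0x1021) and takes the result modulo 16384; if the key contains a non-empty {...} hash tag, only the text inside the first such tag is hashed. A minimal bash sketch of that calculation for ASCII keys; crc16_xmodem and key_hash_slot are hypothetical names:

```shell
# Hypothetical helper: bitwise CRC16/XMODEM (poly 0x1021, initial value 0),
# the checksum Redis Cluster uses for key hashing. ASCII keys assumed.
crc16_xmodem() {
  local s=$1 crc=0 i j c
  for ((i = 0; i < ${#s}; i++)); do
    printf -v c '%d' "'${s:i:1}"       # character code of the i-th character
    crc=$(( crc ^ (c << 8) ))
    for ((j = 0; j < 8; j++)); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# Hypothetical helper: hash slot of a key, honouring {hash tags}.
key_hash_slot() {
  local key=$1 rest tag
  if [[ $key == *"{"* ]]; then
    rest=${key#*\{}                    # text after the first "{"
    if [[ $rest == *"}"* ]]; then
      tag=${rest%%\}*}                 # text up to the next "}"
      if [[ -n $tag ]]; then key=$tag; fi
    fi
  fi
  echo $(( $(crc16_xmodem "$key") % 16384 ))
}
```

key_hash_slot k1 gives 12706, the slot in the first redirection above. The server-side answer is available with /data/redis-6.2.8-cluster/bin/redis-cli -p 6701 cluster keyslot k1, which is a quick way to cross-check the sketch.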

4. Building a Redis Cluster quickly

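Prerequisite: the six nodes must already be running and must be empty. Because we already built the cluster in the previous steps, running the command now fails with the following error: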

[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli --cluster create 192.168.238.102:6701 192.168.238.102:6702 192.168.238.102:6703 192.168.238.102:6704 192.168.238.102:6705 192.168.238.102:6706 --cluster-replicas 1
[ERR] Node 192.168.238.102:6701 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

# Make sure this is a test environment with no data you need to keep
pkill redis
ps -ef|grep redis
cd /data/redis-6.2.8-cluster/data
rm *.rdb
rm *.conf

# Then start Redis again
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6701.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6702.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6703.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6704.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6705.conf
/data/redis-6.2.8-cluster/bin/redis-server /data/redis-6.2.8-cluster/conf/redis_6706.conf
ps -ef|grep redis

# Run the command again and type yes when prompted.
# --cluster create performs node discovery, slot assignment and replica assignment automatically, replacing the manual steps in section 3.
# Note that it chose 6701, 6702 and 6703 as masters, which differs from the plan in section 1; the anti-affinity warning is expected because all six nodes run on one host.
[root@redis-cluster ~]# /data/redis-6.2.8-cluster/bin/redis-cli --cluster create 192.168.238.102:6701 192.168.238.102:6702 192.168.238.102:6703 192.168.238.102:6704 192.168.238.102:6705 192.168.238.102:6706 --cluster-replicas 1
>>> Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 192.168.238.102:6705 to 192.168.238.102:6701
Adding replica 192.168.238.102:6706 to 192.168.238.102:6702
Adding replica 192.168.238.102:6704 to 192.168.238.102:6703
>>> Trying to optimize slaves allocation for anti-affinity
[WARNING] Some slaves are in the same host as their master
M: 5255734567b27a74f348b775eca83ff3e315457e 192.168.238.102:6701
   slots:[0-5460] (5461 slots) master
M: d2f58242287d38c5d8df1761d662c9bebe5c4d75 192.168.238.102:6702
   slots:[5461-10922] (5462 slots) master
M: 191cb9db4d9ec21ff52f812294ea5d588ec549bf 192.168.238.102:6703
   slots:[10923-16383] (5461 slots) master
S: 12473abd3e9613fba3b338a0a01a74b56bad55c9 192.168.238.102:6704
   replicates 5255734567b27a74f348b775eca83ff3e315457e
S: ff6751b74a32b2a28293b627354cb2fb30b4fdfa 192.168.238.102:6705
   replicates d2f58242287d38c5d8df1761d662c9bebe5c4d75
S: d47c1903030a1238494f55968f30270cd0235439 192.168.238.102:6706
   replicates 191cb9db4d9ec21ff52f812294ea5d588ec549bf
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
>>> Performing Cluster Check (using node 192.168.238.102:6701)
M: 5255734567b27a74f348b775eca83ff3e315457e 192.168.238.102:6701
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
S: 12473abd3e9613fba3b338a0a01a74b56bad55c9 192.168.238.102:6704
   slots: (0 slots) slave
   replicates 5255734567b27a74f348b775eca83ff3e315457e
S: d47c1903030a1238494f55968f30270cd0235439 192.168.238.102:6706
   slots: (0 slots) slave
   replicates 191cb9db4d9ec21ff52f812294ea5d588ec549bf
S: ff6751b74a32b2a28293b627354cb2fb30b4fdfa 192.168.238.102:6705
   slots: (0 slots) slave
   replicates d2f58242287d38c5d8df1761d662c9bebe5c4d75
M: d2f58242287d38c5d8df1761d662c9bebe5c4d75 192.168.238.102:6702
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
M: 191cb9db4d9ec21ff52f812294ea5d588ec549bf 192.168.238.102:6703
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
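The same consistency checks that end the create output can be rerun at any time with redis-cli --cluster check. A minimal bash sketch that turns its output into an exit status for scripts; cluster_check_ok is a hypothetical name, and it only looks for the two [OK] lines shown above:

```shell
# Hypothetical helper: read `redis-cli --cluster check` output on stdin and
# succeed only if both [OK] lines from the output above are present.
cluster_check_ok() {
  local out
  out=$(cat)
  grep -qF '[OK] All nodes agree about slots configuration.' <<<"$out" \
    && grep -qF '[OK] All 16384 slots covered.' <<<"$out"
}
```

For example: /data/redis-6.2.8-cluster/bin/redis-cli --cluster check 192.168.238.102:6701 | cluster_check_ok && echo "cluster looks healthy".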